Via the OpenJUMP list comes this notice of the OGRS 2009: International Opensource Geospatial Research Symposium conference in Nantes, France.

France and Australia in one year sounds like a pretty good training plan to me!

Monday, 15 December 2008

Friday, 28 November 2008

KML Pie Charts in JEQL

Pie Charts are a nice way of displaying thematic visualization in Google Earth. They're also a good test of the chops of a KML generator. So naturally I was keen to see how to produce pie charts with JEQL.

For simplicity I decided to make my test statistic an orthographic comparison of the names of countries, showing the relative length of the country names and their vowel/consonant distribution. (This wasn't because this is a particularly interesting statistic, but it uses easily available data and exercises some of the data processing capabilities of JEQL).

The solution ended up using lots of existing capabilities, such as splitting multigeometries, regular expressions, JTS functions such as interior point, boundary and distance, and of course generating KML with extrusions and styling. The only new function I had to add was one to generate elliptical arc polygons - which is a good thing to have.

The results look pretty snazzy, I think - and would be even better with more meaningful data!

For simplicity I decided to make my test statistic an orthographic comparison of the names of countries, showing the relative length of the country names and their vowel/consonant distribution. (This wasn't because this is a particularly interesting statistic, but it uses easily available data and exercises some of the data processing capabilities of JEQL).

The solution ended up using lots of existing capabilities, such as splitting multigeometries, regular expressions, JTS functions such as interior point, boundary and distance, and of course generating KML with extrusions and styling. The only new function I had to add was one to generate elliptical arc polygons - which is a good thing to have.

The results look pretty snazzy, I think - and would be even better with more meaningful data!

Tuesday, 4 November 2008

Tuesday, 21 October 2008

Nostalgic Trivia

When I was but a wee nerdling, I took a course taught by a grizzled veteran of the computer industry. I can no longer remember the subject matter of the course, but I do remember that at one point he referred to the main players in the computer business as "IBM and D'BUNCH". D'BUNCH were DEC, Burroughs, Univac, NCR, Control Data, and Honeywell. (And yes, I had to resort to Wikipedia to remember all these names.)

The moral of the story? Computer manufacturers come and go, but IBM remaineth eternal, apparently.

Dating myself even more, in the early part of my career I used machines made by the first 3 of these. The Univac had the distinction of having the most obtuse, unwieldy, difficult-to-use OS I have ever encountered. DEC, in contrast, had the best OS (of proprietary ones, that is - *nix blows 'em all away).

The moral of the story? Computer manufacturers come and go, but IBM remaineth eternal, apparently.

Dating myself even more, in the early part of my career I used machines made by the first 3 of these. The Univac had the distinction of having the most obtuse, unwieldy, difficult-to-use OS I have ever encountered. DEC, in contrast, had the best OS (of proprietary ones, that is - *nix blows 'em all away).

Monday, 20 October 2008

Untangling REST

Thanks to Sean I just learned about Roy Fielding's blog.

I don't know why it never occurred to me that Roy Fielding would have a blog, and it would likely to be a good source of commentary on the evolving philosophy of the Web, but it didn't. He does, and sure enough it's chock full o' goodness.

Highlights include this post about how to efficiently build a RESTful Internet-scale event notification system for querying Flickr photo update events - using images as bitmap indexes of event timeslices! Or the post which Sean noted, along with this one - both cri-de-coeurs about the pain of seing the clean concept of REST muddied to the point of meaningless.

Anyone confused about REST would do well to avoid inhaling too much smoke and get a dose of fresh air from the source...

I don't know why it never occurred to me that Roy Fielding would have a blog, and it would likely to be a good source of commentary on the evolving philosophy of the Web, but it didn't. He does, and sure enough it's chock full o' goodness.

Highlights include this post about how to efficiently build a RESTful Internet-scale event notification system for querying Flickr photo update events - using images as bitmap indexes of event timeslices! Or the post which Sean noted, along with this one - both cri-de-coeurs about the pain of seing the clean concept of REST muddied to the point of meaningless.

Anyone confused about REST would do well to avoid inhaling too much smoke and get a dose of fresh air from the source...

Tuesday, 7 October 2008

Improvements to JTS buffering

By far the most difficult code in JTS to develop has been the buffer algorithm. It took a lot of hard graft of thinking, coding and testing to achieve a solid level of robustness and functionality. There have been a couple of iterations of improvements since the first version shipped, but the main features of the algorithm have been pretty stable.

However, it was always clear that the performance and memory-usage characteristics left something to be desired. This is particularly evident in cases which involve large buffer distances and/or complex geometry. These shortcomings are even more apparent in GEOS, which is less efficient at computation involving large amounts of memory allocation.

I'm pleased to say that after a few years of gestating ideas (really! - at least on and off) about how to improve the buffer algorithm, I've finally been able to implement some enhancements which address these problems. In fact, they provide dramatically better performance in the above situations. As an example, here are timing comparisons between JTS 1.9 and the new code (the input data is 50 polygons for African countries, in lat-long):

Another tester reports that a buffering task which took 83 sec with JTS 1.9 now takes 2 sec with the new code.

But wait, there's more! In addition to the much better performance of the new algorithm, the timings reveal a further benefit - once the buffer distance gets over a certain size (relative to the input), the execution time actually gets faster. (In fact, this is as it should be - as buffer distances get very large, the shape of the input geometry has less and less effect on the shape of the buffer curve.)

This code will appear in JTS 1.10 Real Soon Now.

However, it was always clear that the performance and memory-usage characteristics left something to be desired. This is particularly evident in cases which involve large buffer distances and/or complex geometry. These shortcomings are even more apparent in GEOS, which is less efficient at computation involving large amounts of memory allocation.

I'm pleased to say that after a few years of gestating ideas (really! - at least on and off) about how to improve the buffer algorithm, I've finally been able to implement some enhancements which address these problems. In fact, they provide dramatically better performance in the above situations. As an example, here are timing comparisons between JTS 1.9 and the new code (the input data is 50 polygons for African countries, in lat-long):

Buffer

Distance JTS 1.9 JTS 1.10

0.01 359 ms 406 ms

0.1 1094 ms 594 ms

1.0 16.453 s 2484 ms

10.0 217.578 s 3656 ms

100.0 728.297 s 250 ms

1000.0 1661.109 s 313 ms

Another tester reports that a buffering task which took 83 sec with JTS 1.9 now takes 2 sec with the new code.

But wait, there's more! In addition to the much better performance of the new algorithm, the timings reveal a further benefit - once the buffer distance gets over a certain size (relative to the input), the execution time actually gets faster. (In fact, this is as it should be - as buffer distances get very large, the shape of the input geometry has less and less effect on the shape of the buffer curve.)

The algorithm improvements which have made such a difference are:

- Improved offset curve geometry - to avoid some nasty issues arising from arc discretization, the original buffer code used some fairly conservative heuristics. These have been fine-tuned to produce a curve which allows more efficent computation, while still maintaining fidelity of the buffer result

- Simplification of input - for large buffer distances, small concavities in the input geometry don't affect the resulting buffer to a significant degree. Removing these in a way which preserves buffer distance accuracy (within tolerance) gives a big improvement in performance.

This code will appear in JTS 1.10 Real Soon Now.

Friday, 22 August 2008

Java Power Tools cuts straight and true

Part of my summer holiday reading is the book Java Power Tools, by John Ferguson Smart.

I count a computer book a good buy if I get one new idea from it; two is stellar; and three or more goes on my "Recommend to Colleagues" list. This one is on the list... Ideas I`ve picked up include:

- the XMLTask extension for Ant, that provides easy and powerful editing of XML files. This should make configuring things like web.xml and struts-config.xml a lot easier. It even provides a way to uncomment blocks of XML markup.

- SchemaSpy, which generates database documentation (including ER diagrams!) from JDBC metadata. The tool also also comes with profiles for interpreting some vendor-specific metadata. It will be interesting to see how it handles spatial datatypes in Oracle and PostGIS...

- using Doxygen to generate documentation for Java source. Doxygen provides more capabilities than Javadoc, including UML diagrams and a variety of output document formats.

- UMLGraph also allows generating UML diagrams from Java source, and embedding them directly in Javadoc.

For graph visualization, SchemaSpy, Doxygen and UMLGraph all use the GraphViz application. This looks like a great tool in its own right. It provides a DSL for specifying graph structures and node and edge symbology, along with a layout and rendering engine which outputs to numerous different formats.

JPT of course covers all the better-known tools such as Ant, Maven, CVS, SVN, JUnit, Bugzilla, Trac, and many others. It doesn`t replace the documentation for these tools, but it does give a good comparative overview and enough details to help you decide which ones you`re going to strap around your waist for the next project.

Friday, 15 August 2008

Be the most popular tile on your block

You might think that the image below is a map of North America population density. You'd be off by one level of indirection...

In fact it's a heat map of the access frequency for Virtual Earth map tiles.

So really it's not an image of where people are, but where they want to be...

So really it's not an image of where people are, but where they want to be...

The image comes from a paper out of Microsoft Research: How We Watch the City: Popularity and Online Maps, by Danyel Fisher.

Makes me wonder if there are tiles that have never been accessed. Like perhaps this one? (It took a looong time to render....)

And what is the most-accessed tile? This one, perhaps?

In fact it's a heat map of the access frequency for Virtual Earth map tiles.

So really it's not an image of where people are, but where they want to be...

So really it's not an image of where people are, but where they want to be...The image comes from a paper out of Microsoft Research: How We Watch the City: Popularity and Online Maps, by Danyel Fisher.

Makes me wonder if there are tiles that have never been accessed. Like perhaps this one? (It took a looong time to render....)

And what is the most-accessed tile? This one, perhaps?

Thursday, 14 August 2008

Revolution is Happening Now

This great graph shows why we're all going to wrassling with parellelization for the rest of our coding lives:

Source: Challenges and Strategies for High End Computing, Kathy Yelick

Source: Challenges and Strategies for High End Computing, Kathy Yelick

Also see this course outline for an sobering/inspiring view of where computation is headed.

Source: Challenges and Strategies for High End Computing, Kathy Yelick

Source: Challenges and Strategies for High End Computing, Kathy YelickAlso see this course outline for an sobering/inspiring view of where computation is headed.

Monday, 4 August 2008

Krusty kurmudgeon Knuth kans kores & kommon kode

Andrew Binstock has an interesting interview with Don Knuth.

Andrew Binstock has an interesting interview with Don Knuth.Professor Knuth makes a few surprising comments, including a low opinion of the current trend towards multicore architectures. Knuth says:

To me, it looks more or less like the hardware designers have run out of ideas, and that they’re trying to pass the blame for the future demise of Moore’s Law to the software writers...But then he goes on to admit:

I haven’t got many bright ideas about what I wish hardware designers would provide instead of multicores...Which seems to me to make his complaint irrelevant, at best. (Not that I don't sympathize with his frustration about banging our heads against the ceiling of sequential processing speed. And as the sage of combinatorial algorithms he must be more aware than most of us about the difficulties of taking advantage of concurrency.)

Another egregious Knutherly opinion is that he is "biased against the current fashion for reusable code". He prefers what he calls "re-editable code". My thought is that open source gives you both options, and personally I am quite happy to reuse, say, the Java API rather than rewriting it. But I guess when most of your code is developed in your own personal machine architecture (Knuth's MIX) then it's a good thing to enjoy rewriting code!

In the end, however, I have to respect the opinions of a man who has written more lines of code and analyzed more algorithms than most of us have had hot dinners. And I still place him in the upper levels of the pantheon of computer science, whose books all programmers would like to have seen gracing their shelves - even if most of us will never read them!

And yes, this post is mostly an excuse for some korny alliteration - but the interview is still worth a read. (Binstock's blog is worth scanning too - he has some very useful posts on aspects of Java development.)

Monday, 21 July 2008

Quote of the Day

Love him or hate, you have to admit that John Dvorak gives good copy:

Vista is essentially the old hooker with a bad facelift and too much makeup. She also can't remember her customers

Vista is essentially the old hooker with a bad facelift and too much makeup. She also can't remember her customers

Tuesday, 24 June 2008

Database design tips for massively-scalable apps

Here's an interesting post on design practices for building massively-scalable apps on database infrastructure such as Google BigTable.

The takeaway: this ain't your granpappy's old relational database system, so throw out everything he taught you. Denormalize. Prefer big fluffy things to small granular things. Don't bother with DB constraints - enforce the model in the application. Prefer small frequent updates to large page updates.

The good news (or bad, depending on how fed up you are with your local DBA) - don't bother with all this unless you intend to scale to millions of users.

The takeaway: this ain't your granpappy's old relational database system, so throw out everything he taught you. Denormalize. Prefer big fluffy things to small granular things. Don't bother with DB constraints - enforce the model in the application. Prefer small frequent updates to large page updates.

The good news (or bad, depending on how fed up you are with your local DBA) - don't bother with all this unless you intend to scale to millions of users.

Monday, 23 June 2008

GeoSVG, anyone?

The GeoPDF format seems to be gaining traction these days. I have to admit, when I first heard of this technology I had the same reaction as James Fee - "What's it good for"? But I'm coming round... A live map with rich information content and a true geospatial coordinate system - what's not to like?

My excuse for such scepticism is my finely-honed technical bullsh*t reflex, which uses the logic of "If this is such a good, obvious, simple idea then why hasn't it been implemented ages ago?".

To be fair, there have been lots of SVG mapping demos, which fill the same use case and provide equivalent functionality. Sadly that concept hasn't really caught fire, though (perhaps due to the ongoing SVG "always a bridesmaid, never a bride" conundrum).

Of course, an idea this good is really too important to be bottled up in the murky world of proprietary technology. It seems like this area is ripe for an open standard. SVG is the obvious candidate for the spatial content (sorry, GeoJSON). What it needs is some standards around modelling geospatial coordinate systems and encoding layers of features. It seems like this should be quite doable. The goal would be to standardize the document format so that viewers could easily be developed (either stand-alone, as modules of existing viewers, or as browser-hosted apps). Also, the feature data should be easy to extract from the data file, for use in other applications.

Perhaps there's already an initiative like this out there - if so, I'd love to hear about it.

My excuse for such scepticism is my finely-honed technical bullsh*t reflex, which uses the logic of "If this is such a good, obvious, simple idea then why hasn't it been implemented ages ago?".

To be fair, there have been lots of SVG mapping demos, which fill the same use case and provide equivalent functionality. Sadly that concept hasn't really caught fire, though (perhaps due to the ongoing SVG "always a bridesmaid, never a bride" conundrum).

Of course, an idea this good is really too important to be bottled up in the murky world of proprietary technology. It seems like this area is ripe for an open standard. SVG is the obvious candidate for the spatial content (sorry, GeoJSON). What it needs is some standards around modelling geospatial coordinate systems and encoding layers of features. It seems like this should be quite doable. The goal would be to standardize the document format so that viewers could easily be developed (either stand-alone, as modules of existing viewers, or as browser-hosted apps). Also, the feature data should be easy to extract from the data file, for use in other applications.

Perhaps there's already an initiative like this out there - if so, I'd love to hear about it.

Monday, 2 June 2008

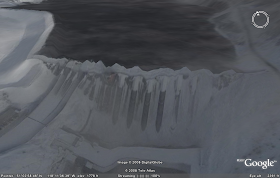

B.C. winter causes frost buckling in Google Earth

The Google Earth imagery of Revelstoke Dam in B.C. (below) looks like it was taken in the depths of winter. Since this is a massive concrete dam, I'm guessing the frost buckling in the image is an artifact of the surface model. Maybe Google need to add some more antifreeze to their TIN algorithm... or perhaps light a twig fire under their rendering engine?

Thursday, 1 May 2008

Database Architecture monograph

In the era of cloud computing, map/reduce, dynamic languages and the semantic web, the stalwart Relational Database Management System is looking a bit fusty. But RDBMSes were perhaps the earliest widely-deployed example of many of the techniques of distributed computing, concurrent programming, and query optimization that are still highly relevant.

Hellerstein, Stonebraker and Hamilton have published a monograph on Architecture of a Database System. With names like that involved you'd expect high quality and some deep insight, and the article delivers. It's a good, accesible summary of the state-of-the-art in RDBMS technology.

Hellerstein, Stonebraker and Hamilton have published a monograph on Architecture of a Database System. With names like that involved you'd expect high quality and some deep insight, and the article delivers. It's a good, accesible summary of the state-of-the-art in RDBMS technology.

JAQL pegs the cool technology mashup meter

JAQL is a query language and engine which has XQuery-like syntax, SQL-like operators, JSON as a native data format, and runs using the Hadoop ma/preduce framework. (Although not mentioned explicitly, it's probably great for social networking as well...)

You might think that JAQL and JEQL were separated at birth, but they actually have no genetic material in common. But it's interesting to see the J*QL acronym space being rapidly populated. The best two vowels are now gone - who's going to be next to pile in?

You might think that JAQL and JEQL were separated at birth, but they actually have no genetic material in common. But it's interesting to see the J*QL acronym space being rapidly populated. The best two vowels are now gone - who's going to be next to pile in?

Wednesday, 30 April 2008

Tuesday, 29 April 2008

Ted Nedward takes on the Tower of Babel

Here's another (as usual) fascinating, detailed, doesn't-this-guy-work-for-a-living post from Ted Nedward. This one starts as a meta-critique of Groovy VS Ruby and morphs into an interesting summary of what the Tower Of Babel IT department is using this year.

Money quote:

I wish I could get back to [C++]for a project in the same way that guys fantasize about running into an old high school girlfriend on a business trip.

Personally, my reaction to my old C++ girlfriend would be "TG I didn't get hitched to this chick - she's way too high-maintenance". Although Ted says she's changed...

Money quote:

I wish I could get back to [C++]for a project in the same way that guys fantasize about running into an old high school girlfriend on a business trip.

Personally, my reaction to my old C++ girlfriend would be "TG I didn't get hitched to this chick - she's way too high-maintenance". Although Ted says she's changed...

Sunday, 20 April 2008

Microsoft's MVP's use... Google?

An article about a candid presentation by Steve Ballmer.

The XP-versus-Vista debacle just reinforces the core value of Open Software. Current version tested, deployed, and working fine? Then nobody can force you to upgrade...

The XP-versus-Vista debacle just reinforces the core value of Open Software. Current version tested, deployed, and working fine? Then nobody can force you to upgrade...

Friday, 18 April 2008

Who's conspicuously absent from the PaaS fray?

Here's a hint: who was using the slogan "The Network is the Computer" 10 years ago? And who was the first company to deliver a RIA technology?

So why have they been MIA in the PaaS goldrush?

Let's think about this another way. What database are people most likely to run on their slice -o'Linux-in-the-cloud? mySQL perhaps? Which was just bought by...?

The Register has an article about a possible JavaOne announcement about how this situation might change (with a leaked slide presentation! Fell off the back of an ftp packet, I guess..)

(The weird thing is is that the presentation talks only about PostgreSQL. An old file? Or a different corporate camp? Didn't get the memo maybe?)

So why have they been MIA in the PaaS goldrush?

Let's think about this another way. What database are people most likely to run on their slice -o'Linux-in-the-cloud? mySQL perhaps? Which was just bought by...?

The Register has an article about a possible JavaOne announcement about how this situation might change (with a leaked slide presentation! Fell off the back of an ftp packet, I guess..)

(The weird thing is is that the presentation talks only about PostgreSQL. An old file? Or a different corporate camp? Didn't get the memo maybe?)

KML Craziness

Why oh why did KML choose to specify colour values as AGBR ABGR rather than RGBA? Does anyone have a rational explanation for this anomaly?

Tuesday, 15 April 2008

Is that cloud on the horizon going to start raining applications?

Timothy O'Brien speculates that the transition to cloud-based computing is happening sooner than expected. He's talking about the new integration between Salesforce.com (which is apparently the poster child for SaaS) and Google Apps (the poster child for desktop replacement by the Web). And he generalizes this to include EC2, SimpleDB, and the "twenty or thirty other companies that are going to join the industry".

He also warns here that this transition could transform the model for software development in ways uncomfortable for IT professionals.

He could be right. Cloud computing does seem to be poised to finally provide the right platform to suck the juice out of corporate data centres. The idea of virtual everything certainly has an appeal (especially to someone like me who is basically a software guy).

But questions occur... Salesforce and Google seem like a perfect match - but what about the other companies that want a piece of this action? Does it matter that you will have to commit everything to a given cloud platform? And what happens if that platform goes away? The more advantage you take of the cloud, the bigger the pain when it disappears. And what about apps which are a bit more specific than CRM (which in my naive view seems like just a fancy Contacts list - and hence an obvious and easy thing to integrate with an office suite).

Tim would probably call these kinds of questions "self-interested observations from one with the most to lose". He mentions a Salesforce meeting where business types applaud a sign showing "Software" with a big red slash through it... Well, maybe. Last I noticed no-one has quite managed to automate generating code from requirements documents (let alone automating the generation of implementable requirments documents out of people's heads 8^). So I would say it's more like "different software" than "no software".

One thing's for sure.. there's going to be some gigantic platform turf wars going on up there in the stratosphere.

(One big disappointment - it sounds like the Salesforce platform is based on their proprietary Apex language. Ugh. Just what the world needs - one more language to debate over. At least Google App Engine picked a real language for their launch!)

He also warns here that this transition could transform the model for software development in ways uncomfortable for IT professionals.

He could be right. Cloud computing does seem to be poised to finally provide the right platform to suck the juice out of corporate data centres. The idea of virtual everything certainly has an appeal (especially to someone like me who is basically a software guy).

But questions occur... Salesforce and Google seem like a perfect match - but what about the other companies that want a piece of this action? Does it matter that you will have to commit everything to a given cloud platform? And what happens if that platform goes away? The more advantage you take of the cloud, the bigger the pain when it disappears. And what about apps which are a bit more specific than CRM (which in my naive view seems like just a fancy Contacts list - and hence an obvious and easy thing to integrate with an office suite).

Tim would probably call these kinds of questions "self-interested observations from one with the most to lose". He mentions a Salesforce meeting where business types applaud a sign showing "Software" with a big red slash through it... Well, maybe. Last I noticed no-one has quite managed to automate generating code from requirements documents (let alone automating the generation of implementable requirments documents out of people's heads 8^). So I would say it's more like "different software" than "no software".

One thing's for sure.. there's going to be some gigantic platform turf wars going on up there in the stratosphere.

(One big disappointment - it sounds like the Salesforce platform is based on their proprietary Apex language. Ugh. Just what the world needs - one more language to debate over. At least Google App Engine picked a real language for their launch!)

Friday, 4 April 2008

Ontogeny recapitulates Phylogeny in the life of a programmer

(C'mon, admit it - you've always wanted to use that as the title of a blog post too...)

Paul's epiphany seems like the equivalent of Ontogeny recapitulating Phylogeny in the evolution of a programmer. "Hey, C has arrays! Hey, C arrays are really just syntactic sugar for pointer dereferencing! Hey, I can index to anywhere in memory really easily! Hey, I can store anywh...."

SEGFAULT - CORE DUMPED

Sh*t.

"Hey, there's this new language called Java! And it has arrays too! Hey, if I index past the end of an array I get a nice error message telling me exactly where in my code the problem occurred! Hey, I think I can knock off work early and go to the pub!"

8^)

Paul's epiphany seems like the equivalent of Ontogeny recapitulating Phylogeny in the evolution of a programmer. "Hey, C has arrays! Hey, C arrays are really just syntactic sugar for pointer dereferencing! Hey, I can index to anywhere in memory really easily! Hey, I can store anywh...."

SEGFAULT - CORE DUMPED

Sh*t.

"Hey, there's this new language called Java! And it has arrays too! Hey, if I index past the end of an array I get a nice error message telling me exactly where in my code the problem occurred! Hey, I think I can knock off work early and go to the pub!"

8^)

Friday, 28 March 2008

JTS at the sharp end of many arrows

Miguel Montesinos and Jorge Sanz from the gvSIG project have made a nice diagram showing the relationships between a bunch of GFOSS projects. It' s nice to see JTS close to the centre of the diagram - although having so many arrows pointed at it makes me a little nervous!

They show JTS having a dependency on Batik for some reason - that must be an error, there's no relationship between the two. Or does Batik use JTS?

It would have been nice to see a "parent-of" relationship between JTS and GEOS and NTS, since the latter two also form a key component of quite a few projects. And I'm pretty sure MapGuide OS uses GEOS, as does OGR.

But it's not surprising there's a few errors and omissions - it's a pretty complicated web of relationships. And this is over only what - 8 years? I wonder what the diagram will look like in another 8...

They show JTS having a dependency on Batik for some reason - that must be an error, there's no relationship between the two. Or does Batik use JTS?

It would have been nice to see a "parent-of" relationship between JTS and GEOS and NTS, since the latter two also form a key component of quite a few projects. And I'm pretty sure MapGuide OS uses GEOS, as does OGR.

But it's not surprising there's a few errors and omissions - it's a pretty complicated web of relationships. And this is over only what - 8 years? I wonder what the diagram will look like in another 8...

Monday, 24 March 2008

Time for JSTS?

Like a lot of stuff, spatial functionality is moving out into the browser. Signs of the times:

Take it away, someone - I'm too busy!

- proj4js

- GeoJSON

- This comment: "The DOJO client-side libraries provide support for performing some simple spatial operations like ‘intersection’ on the client-side." (Although I haven't been able to find this functionality in a quick browse of Dojo - can anyone confirm this?)

Take it away, someone - I'm too busy!

Saturday, 22 March 2008

Swimming taught by telephone

Has Automation affected YOUR job?

Can't resist posting this (from Modern Mechanix).

I'm definitely enjoying not having grimy hands, but what happened to the shorter work week and more leisure time?

I'm definitely enjoying not having grimy hands, but what happened to the shorter work week and more leisure time?

Tuesday, 18 March 2008

World Population Cartograms

The Daily Green has some cartograms showing the change in distribution of world population since 1900.

But there's another aspect to this that the original images don't show - the increase in the absolute number of people in the world. So here's the images with that factor applied:

But there's another aspect to this that the original images don't show - the increase in the absolute number of people in the world. So here's the images with that factor applied:

2050 - ~ 9 B

A good kick in the pants for the GeoWeb?

Reading the blog posts that are starting to come out about the ESRI Dev Summit, I'm struck by a few things:

- REST! KML! JSON! AJAX! Dojo! I haven't seen this many tech buzzwords out of ESRI since ArcGIS on Windows was released with COM! VBA! DLLs! Hopefully some of these have more legs

- Clearly ESRI is embracing the GeoWeb concept with a vengeance. It will be very interesting to see how these new modes of access to GIS functionality plays out. To what extent will the marriage of web services and GIS processing be an effective model?

- They also seem to be accepting Google Maps/Earth and MS VE as valid spatial delivery platforms. It sounds like there is some pretty full-featured capabilities to deliver data to these platforms. It's going to be interesting to see the uptake on these capabilities, and whether ESRI's heart is really in making these platforms perform to their full capability. No doubt there will be some fascinating business implications as well that get played out over time...

- I'll be interested to see whether ESRI's "legitimizing" spatial-via-web-service will have any effect on the OGC W*S world. It seems to me that while OGC was early out of the gate with a web service suite, they seem to be wallowing in the doldrums as far as making the specs effective for real-world use. The OGC W*S suite was quickly adopted by the OSS world, and for good reason - open source tends to be very faithful to open standards, since a design goal is usually to have a high degree of interoperability. Not to mention that it's easier to code to a standard which has already done a lot of the hard thinking. But - the cool kids are losing interest in the crusty old W*S interfaces, since they're not keeping up with the rapid emergence of exciting new web paradigms.

- Ultimately ESRI's trailblazing will be a good thing for OSS technologies. Nothing like having a working system to inspect, copy, and improve. And the more open, standard technologies that system uses, the easier it is to steal - um, learn from. Also, as the GIS stack gets more open interface technologies accepted, it becomes easier to plug in heterogeneous components into that stack.

Monday, 17 March 2008

Tired of those boring old conformal map projections? Make your own!

Flex Projector is an interesting tool that allows you to make a custom map projection by adjusting the location and shape of meridians and parallels.

Here's a nice projection I defined to maximise the area of tropical vacation spots 8^)

The downside of this app is that as far as I can tell, there's no way to export a projection once it's defined. Instead, you have to use the Flex Projector application to reproject datasets. So it doesn't look like you can make your own custom projection for use in say, PostGIS or OGR.

I guess it might be asking a little much for the app to spit out some custom Proj4 C code with an associated EPSG code and CRS WKT... But hey, it's an open source app, so come on someone - how about it?

Here's a nice projection I defined to maximise the area of tropical vacation spots 8^)

The downside of this app is that as far as I can tell, there's no way to export a projection once it's defined. Instead, you have to use the Flex Projector application to reproject datasets. So it doesn't look like you can make your own custom projection for use in say, PostGIS or OGR.

I guess it might be asking a little much for the app to spit out some custom Proj4 C code with an associated EPSG code and CRS WKT... But hey, it's an open source app, so come on someone - how about it?

Friday, 14 March 2008

Quote of the (Pi) Day

Sir, I send a rhyme excelling

In sacred truth and rigid spelling

Numerical sprites elucidate

For me the lexicon's full weight

Maybe not the greatest lyric poem - but list the number of letters in each word. (If you prefer a shortcut this site has them already listed - along with 9, 979 more)

And notice the value of the date as MM.DD...

In sacred truth and rigid spelling

Numerical sprites elucidate

For me the lexicon's full weight

Maybe not the greatest lyric poem - but list the number of letters in each word. (If you prefer a shortcut this site has them already listed - along with 9, 979 more)

And notice the value of the date as MM.DD...

Monday, 3 March 2008

Branch-and-Bound algorithms for Nearest Neighbour queries

This paper by Roussopoulos et al is a fine expositions of how to use a Branch-and-Bound algorithm in conjunction with an R-tree index to efficiently perform Nearest-Neighbour queries on a spatial database.

Nearest-neighbour is a pretty standard query for spatial database. Oracle Spatial offers this capability natively. It would be great if PostGIS did too. If the GIST index API offers appropriate access methods, it seems like it might not be too hard to implement the Roussopoulos algorithm. (How about it, Paul, now that you're dedicating your life to becoming a PostGIS coding wizard...)

I'm also thinking that this approach might produce an efficient algorithm for computing distance between Geometrys for JTS. It's always bugged me that the JTS distance algorithm is just plain ol' O(N^2) brute-force. It seems like there has to be a better way, but as usual there seems to be precious little prior art out there in web-land. This should also work well in the "Prepared" paradigm, which means that it will provide an efficient implementation for PostGIS as well. Soon, hopefully...

Nearest-neighbour is a pretty standard query for spatial database. Oracle Spatial offers this capability natively. It would be great if PostGIS did too. If the GIST index API offers appropriate access methods, it seems like it might not be too hard to implement the Roussopoulos algorithm. (How about it, Paul, now that you're dedicating your life to becoming a PostGIS coding wizard...)

I'm also thinking that this approach might produce an efficient algorithm for computing distance between Geometrys for JTS. It's always bugged me that the JTS distance algorithm is just plain ol' O(N^2) brute-force. It seems like there has to be a better way, but as usual there seems to be precious little prior art out there in web-land. This should also work well in the "Prepared" paradigm, which means that it will provide an efficient implementation for PostGIS as well. Soon, hopefully...

Sunday, 17 February 2008

The Four Programmers

Here's an amusing adaptation of Monty Python's Four Yorkshiremen sketch...

I feel increasingly like this myself (more so now that I'm in a company where I am the oldest employee)...

When I started coding we had to type out our programs on punchcards. If you made one typo you had to retype the entire card, so to avoid mistakes we wrote out our code on paper pads with 80-column grids. You had to stand in line to submit your card stack to the guy running the card reader, and then pick up your output once it had been separated and filed by some other guy. And if you had a syntax error - back to the cardpunch to do it all over again!

We thought we'd died and gone to heaven when we got online accounts and were allowed to use a hardcopy DecWriter terminal. It even had an APL character set (don't forget to change the charset when you switched back to FORTRAN). This was better - we could "erase" (actually just strikeout) and change mistakes on the line. But forget about printing out your entire program - at 300 baud this could take many minutes, which was all chewing up your connect time allocation.

But we had it lucky.. I worked with a guy who started out coding accounting routines for a magnetic drum-based system in the early 60's. A big chunk of their time was spent reordering the individual instructions around the drum to reduce read latency. Fun stuff!

And he was lucky compared to Don Booth, who was at the oceanographic research centre where I worked during my undergrad days. He was one of the pioneers of computing in Britain. All they had for storage was a 1000-word mercury delay tube... and no doubt they spent their coding time plugging wires and changing tubes.

But you try and tell kids with their touch-sensitive palmtops that these days, and they won't believe you...

I feel increasingly like this myself (more so now that I'm in a company where I am the oldest employee)...

When I started coding we had to type out our programs on punchcards. If you made one typo you had to retype the entire card, so to avoid mistakes we wrote out our code on paper pads with 80-column grids. You had to stand in line to submit your card stack to the guy running the card reader, and then pick up your output once it had been separated and filed by some other guy. And if you had a syntax error - back to the cardpunch to do it all over again!

We thought we'd died and gone to heaven when we got online accounts and were allowed to use a hardcopy DecWriter terminal. It even had an APL character set (don't forget to change the charset when you switched back to FORTRAN). This was better - we could "erase" (actually just strikeout) and change mistakes on the line. But forget about printing out your entire program - at 300 baud this could take many minutes, which was all chewing up your connect time allocation.

But we had it lucky.. I worked with a guy who started out coding accounting routines for a magnetic drum-based system in the early 60's. A big chunk of their time was spent reordering the individual instructions around the drum to reduce read latency. Fun stuff!

And he was lucky compared to Don Booth, who was at the oceanographic research centre where I worked during my undergrad days. He was one of the pioneers of computing in Britain. All they had for storage was a 1000-word mercury delay tube... and no doubt they spent their coding time plugging wires and changing tubes.

But you try and tell kids with their touch-sensitive palmtops that these days, and they won't believe you...

Wednesday, 30 January 2008

The End of an Architectural Era?

Stonebraker et al have an interesting paper pointing out the antiquity and consequent limitations of the classic relational database architecture in today's world of massive disk/cycles/core.

If they are correct (and in spite of the recent MapReduce blunder Stonebraker has made a lot of great calls in the DB world), the world of data management is going to get awfully interesting in the coming years. The DBA's & DA's of this world have been living a relatively comfortable existence compared to those who are wandering across the stormy badlands of the middle tier. But Stonebraker postulates at least 5 radically variant database architectures to address specific use cases of data management. This would seem to lead to a much more complex world for data architects. But maybe a windfall for the consultants who find their niche?

If they are correct (and in spite of the recent MapReduce blunder Stonebraker has made a lot of great calls in the DB world), the world of data management is going to get awfully interesting in the coming years. The DBA's & DA's of this world have been living a relatively comfortable existence compared to those who are wandering across the stormy badlands of the middle tier. But Stonebraker postulates at least 5 radically variant database architectures to address specific use cases of data management. This would seem to lead to a much more complex world for data architects. But maybe a windfall for the consultants who find their niche?

Sunday, 20 January 2008

Quote of the Day

Java - learn once, write anywhere

"From my personal experience, that is quite true. I started programming in Java in 1997, and since then I have built web applications, database applications, mobile applications for Palm and Pocket PC, an application server for CTI applications, CORBA-based distributed applications, network management applications, server-side business components for application servers, fat-client business applications, an IDE, a Rich Client Platform, a modeling tool among other kinds of software. All that across different operating systems and using similar products by different vendors.

Of course I had to learn something new for each kind of "application style", but the programming language and core class library were always there for me. That is a huge thing. Before Java, it was really hard to move from one domain to another, or even move from vendor to vendor. Sun has my eternal gratitude for bringing some sanity to the software development industry."

- Rafael Chavez

"From my personal experience, that is quite true. I started programming in Java in 1997, and since then I have built web applications, database applications, mobile applications for Palm and Pocket PC, an application server for CTI applications, CORBA-based distributed applications, network management applications, server-side business components for application servers, fat-client business applications, an IDE, a Rich Client Platform, a modeling tool among other kinds of software. All that across different operating systems and using similar products by different vendors.

Of course I had to learn something new for each kind of "application style", but the programming language and core class library were always there for me. That is a huge thing. Before Java, it was really hard to move from one domain to another, or even move from vendor to vendor. Sun has my eternal gratitude for bringing some sanity to the software development industry."

- Rafael Chavez

The Paradoxically Poor Power of Visual Programming

I've just been reading about TextUML, which is a language and IDE for textually defining UML diagrams by a start-up company called abstratttechnologies.

This seems like both a stroke of brilliance and painfully obvious. It makes me wonder why UML isn't already defined in terms of a serializable language. The big advantage of this I think would be the ability to have a clearly defined semantics. In the past when reading about UML I've been quite frustrated by an apparent lack of rigour in the definition of the various UML concepts and diagrams. It's always seemed to me that a metalanguage like UML is more in need of fully-defined semantics than application languages. Most (all?) application languages after all are executable, which gives provides a final authority for the meaning of a program written in them - run it and see what happens. AFAIK UML isn't really (or not conveniently) executable in the same way.

Which brings me to the paradox promised in the title of this post. One of the claims for TextUML is that it is easier to write and express complex models in than a purely diagram-based UML tool. I can easily believe this - developing a complex model by diagrams seems cumbersome and limited compared to writing a textual description. And I think this generalizes to the concept of visual programming in general. We've had easy access to powerful graphics capabilities for over 20 years now, culminating in today's basically unlimited in graphical capabilities. In spite of this, text-base programming still rules the roost when it comes to expressiveness, flexibility and speed.

So the paradox is: why is a 1-dimensional representation more powerful than a 2-dimensional representation? To put this another way, why does a 1D representation seem less "flat" than a 2D representation?

For further study: would the same apply to a 3D representation? Or would a move to 3D jump out of some sort of planar well, and end up being more expressive than 1D?

By the way, abstratt is right here in l'il ol' Victoria, and the lead designer (and CEO) Rafael Chavez is giving a talk at VicJUG in March. I'm definitely going to attend - I suspect he may have an interesting take on this issue.

This seems like both a stroke of brilliance and painfully obvious. It makes me wonder why UML isn't already defined in terms of a serializable language. The big advantage of this I think would be the ability to have a clearly defined semantics. In the past when reading about UML I've been quite frustrated by an apparent lack of rigour in the definition of the various UML concepts and diagrams. It's always seemed to me that a metalanguage like UML is more in need of fully-defined semantics than application languages. Most (all?) application languages after all are executable, which gives provides a final authority for the meaning of a program written in them - run it and see what happens. AFAIK UML isn't really (or not conveniently) executable in the same way.

Which brings me to the paradox promised in the title of this post. One of the claims for TextUML is that it is easier to write and express complex models in than a purely diagram-based UML tool. I can easily believe this - developing a complex model by diagrams seems cumbersome and limited compared to writing a textual description. And I think this generalizes to the concept of visual programming in general. We've had easy access to powerful graphics capabilities for over 20 years now, culminating in today's basically unlimited in graphical capabilities. In spite of this, text-base programming still rules the roost when it comes to expressiveness, flexibility and speed.

So the paradox is: why is a 1-dimensional representation more powerful than a 2-dimensional representation? To put this another way, why does a 1D representation seem less "flat" than a 2D representation?

For further study: would the same apply to a 3D representation? Or would a move to 3D jump out of some sort of planar well, and end up being more expressive than 1D?

By the way, abstratt is right here in l'il ol' Victoria, and the lead designer (and CEO) Rafael Chavez is giving a talk at VicJUG in March. I'm definitely going to attend - I suspect he may have an interesting take on this issue.