By far the most difficult code in JTS to develop has been the buffer algorithm. It took a lot of hard graft of thinking, coding and testing to achieve a solid level of robustness and functionality. There have been a couple of iterations of improvements since the first version shipped, but the main features of the algorithm have been pretty stable.

However, it was always clear that the performance and memory-usage characteristics left something to be desired. This is particularly evident in cases which involve large buffer distances and/or complex geometry. These shortcomings are even more apparent in GEOS, which is less efficient at computation involving large amounts of memory allocation.

I'm pleased to say that after a few years of gestating ideas (really! - at least on and off) about how to improve the buffer algorithm, I've finally been able to implement some enhancements which address these problems. In fact, they provide

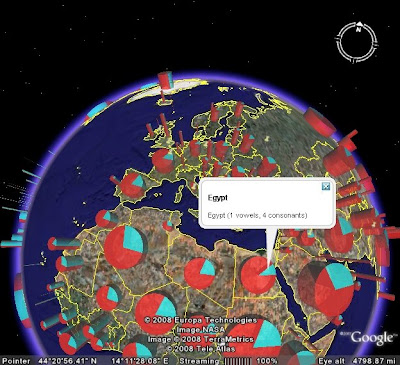

dramatically better performance in the above situations. As an example, here are timing comparisons between JTS 1.9 and the new code (the input data is 50 polygons for African countries, in lat-long):

Buffer

Distance JTS 1.9 JTS 1.10

0.01 359 ms 406 ms

0.1 1094 ms 594 ms

1.0 16.453 s 2484 ms

10.0 217.578 s 3656 ms

100.0 728.297 s 250 ms

1000.0 1661.109 s 313 ms

Another tester reports that a buffering task which took

83 sec with JTS 1.9 now takes

2 sec with the new code.

But wait, there's more! In addition to the much better performance of the new algorithm, the timings reveal a further benefit - once the buffer distance gets over a certain size (relative to the input), the execution time actually gets

faster. (In fact, this is as it should be - as buffer distances get very large, the shape of the input geometry has less and less effect on the shape of the buffer curve.)

The algorithm improvements which have made such a difference are:

- Improved offset curve geometry - to avoid some nasty issues arising from arc discretization, the original buffer code used some fairly conservative heuristics. These have been fine-tuned to produce a curve which allows more efficent computation, while still maintaining fidelity of the buffer result

- Simplification of input - for large buffer distances, small concavities in the input geometry don't affect the resulting buffer to a significant degree. Removing these in a way which preserves buffer distance accuracy (within tolerance) gives a big improvement in performance.

A nice side effect of this work is the development of a solid methodology for validating buffers, and a thorough test suite for correctness, robustness, and performance.

This code will appear in JTS 1.10 Real Soon Now.