In a previous post I described how the JTS Topology Suite operation IsSimpleOp has been completely rewritten to reduce code dependencies, improve performance, and provide a simpler, more understandable implementation. The post points out that the IsValidOp implementation would benefit from the same treatment. This work has now been carried out, with similar benefits achieved.

The original IsValidOp code used the GeometryGraph framework to represent the topology of geometries. This code reuse reduced development effort, but it carried some serious drawbacks:

- The GeometryGraph structure was used for many JTS operations, including overlay, buffer, and spatial predicate evaluation. This made the code complex, hard to understand, and difficult to maintain and enhance

- The GeometryGraph structure computes the full topology graph of the input geometry, in order to support constructive operations such as overlay and buffer. This makes it slower and more subject to robustness problems. Non-constructive operations such as IsValidOp and IsSimpleOp can compute topology "on-the-fly", which allows short-circuiting processing as soon as an invalidity is found.

The new

IsValidOp implementation is more self-contained, relying only on the

noding framework and some basic spatial predicates. Using the

MCIndexNoder allows short-circuited detection of some kinds of invalidity, which improves performance. And it will be easy to switch to a different noding strategy should a superior one arise.

However, dropping

GeometryGraph means that the topology information required to confirm validity needs to be computed some other way. The goal is to compute just enough topological structure to evaluate validity, and do that efficiently on an as-needed basis. This required some deep thinking about validity to pare the topology information required down to a minimum. This was assisted by the

extensive set of unit tests for IsValidOp, which ensured that all possible situations were handled by the new logic (although there was an untested situation which uncovered a

bug late in development.) The resulting algorithm uses some elegant logic and data structures, which are explained in detail below.

OGC Validity

JTS implements the

OGC Simple Features geometry model. To understand the validity algorithm, it helps to review the rules of geometry validity in the OGC specification:

For non-polygonal geometry types, the validity rules are simple;

- Coordinates must contain valid numbers

- LineStrings must not be zero-length

- LinearRings must be simple (have no self-intersections)

For polygonal geometry, on the other hand, the validity rules are quite stringent (sometimes considered too much so!). The rules are:

- Rings must be simple (have no self-intersections)

- Rings must not cross, and can touch only at discrete points

- Holes must lie inside their shell

- Holes must not lie inside another hole of their polygon

- The interiors of polygons must be connected

- In a MultiPolygon, the element interiors must not intersect (so polygons cannot overlap)

- In a MultiPolygon, the element boundaries may intersect only at a finite number of points

Validity Algorithm for Polygonal Geometry

The new IsValidOp uses an entirely new algorithm to validate polygonal geometry. It has the following improvements:

- It supports short-circuiting when an invalid situation is found, to improve performance

- Spatial indexing is used in more places

- The code is simpler and more modular. This makes it easier to understand, and easier to adapt to new requirements (e.g. such as the ability to validate self-touching rings described below)

The algorithm consists of a sequence of checks for different kinds of invalid situations. The order of the checks is important, since the logic for some checks depends on previous checks passing.

1. Check Ring Intersections

The first check determines if the rings in the geometry have any invalid intersections. This includes the cases of a ring crossing itself or another ring, a ring intersecting itself or another ring in a line segment (a collinear intersection), and a ring self-touching (which is invalid in the OGC polygon model). This check can use the robust and performant machinery in the JTS noding package. If an invalid intersection is found, the algorithm can return that result immediately.

Kinds of invalid intersections:

(i) a self-touch; (ii) collinear; (iii) segment crossing; (iv) vertex crossing

This check is an essential precursor to all the remaining checks. If a geometry has no invalid intersections this confirms that its rings do not self-cross or partially overlap. This means that the results of orientation and point-in-polygon computations are reliable. It also indicates that the rings are properly nested (although it remains to be determined if the nesting of shells and holes is valid).

Intersection search is the most computationally expensive phase of the algorithm. Because of this, information about the locations where rings touch is saved for use in the final phase of checking connected interiors.

2. Check Shell and Hole nesting

If no invalid intersections are found, then the rings are properly nested. Now it is necessary to verify that shells and holes are correctly positioned relative to each other:

- A hole must lie inside its shell

- A hole may not lie inside another hole.

- In MultiPolygons, a shell may not lie inside another shell, unless the inner shell lies in a hole of the outer one.

Ring position can often be tested using a simple Point-In-Polygon test. But it can happen that the ring point tested lies on the boundary of the other ring. In fact, it is possible that

all points of a ring lie on another ring. In this case the Point-In-Polygon test is not sufficient to provide information about the relative position of the rings. Instead, the topology of the incident segments at the intersection is used to determine the relative position. Since the rings are known to not cross, it is sufficient to test whether a single segment of one ring lies in the interior or exterior of the other ring.

MultiPolygon with an element where all vertices lie on the boundary

Checking nesting of holes and shells requires comparing all interacting pairs of rings. A simple looping algorithm has quadratic complexity, which is too slow for large geometries. Instead, spatial indexes (using the

STRtree) are used to make this process performant.

3. Check Connected Interior

The final check is that the interior of each polygon is connected. In a polygon with no self-touching rings, there are two ways that interior disconnection can happen:

- a chain of one or more touching holes touches the shell at both ends, thus splitting the polygon in two

- a chain of of two or more touching holes forms a loop, thus enclosing a portion of the polygon interior

To check that these conditions do not occur, the only information needed is the set of locations where two rings touch. These induce the structure of an

undirected graph, with the rings being the graph vertices, and the touches forming the edges. The situations above correspond to the touch graph containing a

cycle (the splitting situation is a cycle because the shell ring is also a graph vertex). (Equivalently, the touch graph of a valid polygon is a

tree, or more accurately a

forest, since there may be sets of holes forming disconnected subgraphs.) So a polygon can be verified to have a connected interior by checking that its touch graph has no cycles. This is done using a simple graph traversal, detecting vertices (rings) which are visited twice.

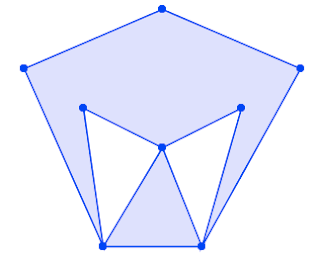

Situations causing a disconnected interior:

(i) a chain of holes; (ii) a cycle of holes

If no invalid situations are discovered during the above checks, the polygonal geometry is valid according to the OGC rules.

Validity with Self-touching Rings

Some spatial systems allow a polygon model in which "inverted shells" are valid. These are polygon shells which contain a self-touch in a way that encloses some exterior area. They also allow "exverted holes", which contain self-touches that disconnect the hole into two or more lobes. A key point is that they do not allow "exverted shells" or "inverted holes"; self-touches may disconnect the polygon exterior, but not the interior. Visually this looks like:

Left: Inverted shell and exverted hole (valid)

Right: Exverted shell and inverted hole (invalid)

This kind of topology is invalid under the OGC polygon model, which prohibits all ring self-intersections. However, the more lenient model still provides unambiguous topology, and most spatial algorithms can handle geometry of this form perfectly well. So there is a school of thought that considers the OGC model to be overly restrictive.

To support these systems JTS provides the

setSelfTouchingRingFormingHoleValid option for

IsValidOp to allow this topology model to be validated. Re-implementing this functionality in the new codebase initially presented a dilemma. It appeared that building the touch graph to check connectivity required computing the geometry of the additional rings formed by the inversions and exversions. This needed a significant amount of additional code and data structures, eliminating the simplicity of the graph-based approach.

However, it turns out that the solution is much simpler. It relies on the fact that valid self-touches can only disconnect the exterior, not the interior. This means that self-touches of inverted shells and exverted holes can be validated by the local topology at the self-intersection node. This condition can be tested by the same logic already used to test for nested touching shells. Even better, if the self-touches are valid, then the touch-graph algorithm to check connectivity still works. (This is the same phenomenon that allows most spatial algorithms to work correctly on the inverted/exverted ring model.)

Performance

As expected, the new codebase provides better performance, due to simpler code and the ability to short-circuit when an invalidity is found. Here's some performance comparisons for various datasets:

| Data | Size | New (ms) | Old (ms) | Improvement |

| world | 244 Polygons, 366K vertices | 340 | 1250 | 3.7 x |

| invalid-polys | 640 Polygons, 455K vertices | 126 | 334 | 2.6 x |

| valid-polys | 640 Polygons, 455K vertices | 244 | 487 | 2 x |

| australia | 1 MultiPolygon, 1,222K vertices | 1321 | 69026 | 52 x |

- world is the standard dataset of world country outlines

- invalid-polys is a dataset of all invalid polygons. valid-polys is the same dataset processed by GeometryFixer. The timings show the effect of short-circuiting invalidity to improve performance.

- australia is a geometry of the Australian coastline and near-shore islands, with 6,697 polygon elements. The dramatic difference in performance reflects the addition of a spatial index for checking nested shells.

Australia and 6,696 islands

Next Steps

The improved IsValidOp will appear in JTS version 1.19. It has already been ported to GEOS, providing similar performance improvements. And since GEOS backs the PostGIS ST_IsValid function, that will become faster as well.

There is one remaining use of GeometryGraph in JTS for a non-constructive operation: the RelateOp class, used to compute all topological spatial predicates. Converting it should provide the same benefits of simpler code and improved performance. It should also improve robustness, and allow more lenient handling of invalid inputs. Watch this space!