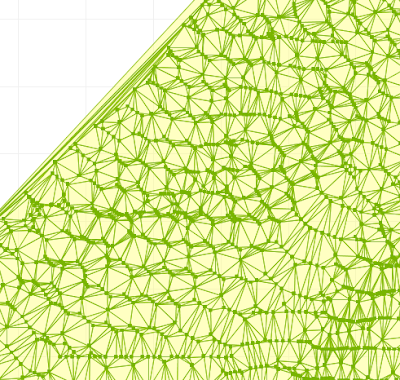

Here's a portion of the dataset which produced the problem:

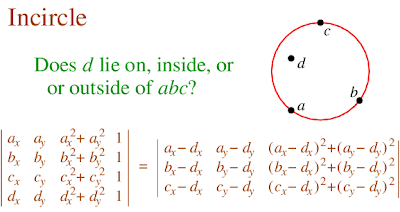

Digging into the code , it turned out (not unexpectedly) to be caused by a robustness failure in the inCircle predicate. InCircle is a key test used in Delaunay triangulation. The standard algorithm for computing it involves determining the sign of the determinant of a 4x4 matrix.

This computation is notoriously prone to robustness failure when evaluated using double-precision arithmetic. The rounding errors inherent in using double-precision can cause the sign of the determinant to be computed incorrectly. (For an good explanation of the issue see this page by Jonathan Shewchuk.)

As a concrete example, consider testing whether the point

POINT (687958.13 7460720.99)

lies in the circumcircle of the triangle

POLYGON ((687958.05 7460725.97, 687957.43 7460725.93, 687957.58 7460721, 687958.05 7460725.97)

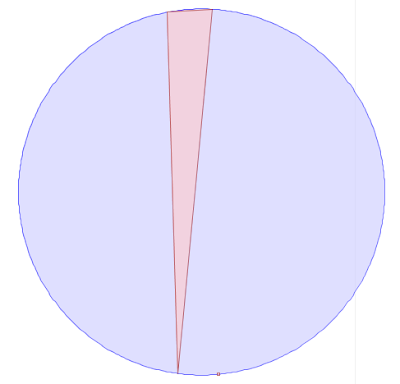

Visually this looks like:

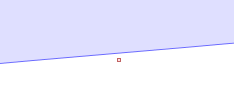

Zooming in, you can see that the query point is NOT in the circumcircle, so that InCircle()=FALSE.

However, evaluating the inCircle predicate determinant using double-precision computes InCircle()=TRUE. This is because the large magnitude of the ordinate values relative to the size of the triangle causes the significant information to be lost in the roundoff error. The effect of this error propagates through the Delaunay algorithm. Ultimately this results in a convergence failure which is detected and reported.

However, evaluating the inCircle predicate determinant using double-precision computes InCircle()=TRUE. This is because the large magnitude of the ordinate values relative to the size of the triangle causes the significant information to be lost in the roundoff error. The effect of this error propagates through the Delaunay algorithm. Ultimately this results in a convergence failure which is detected and reported.Luckily, a solution was immediately to hand, in the form of the DoubleDouble extended-precision library I ported to Java a while ago. Re-implementing the predicate evaluation using DoubleDouble computed the correct results for inCircle in all cases. This eliminated the robustness failure, and allowed the Delaunay Triangulation of the entire 288K point dataset to be computed without errors (for the record, this took 5 min 20 s).

The DoubleDouble code had been a solution in search of a problem for quite a while, so I was happy to get to prove its usefulness in a real-world situation.

And the story doesn't end there - it gets even better...

5 comments:

Hi,

Good work, Martin.

I had similar problems with my triangulation library, but I haven't get error for a long time (probably because I don't use it ;-)

Do you know if the dataset which caused failure is available for tests ?

Another point that worries me in my lib is that I do not detect 4 points strictly aligned or stricly lying on the same circle. A few month ago, I fixed some weird results I get while triangulating a regular grid, but I'm quite sure my code is not fully robust if alignment or co-circle points are found in the dataset. Do you use some tips to deal with these cases.

You may remember some benchmarking I did a few month ago with double, DoubleDouble and some Arbitrary precision libraries. I get a coeff of 40x longer with DoubleDouble than with double (and 300x with BigDecimal). Do you confirm this results or do you get better ones ?

Thanks again for your excellent work

Michaël

Hi, Michael.

Yes, the dataset was posted on the JTS list on April 11. Here's the link:

http://sourceforge.net/mailarchive/forum.php?thread_name=1270982868.2207.23.camel%40braz-laptop&forum_name=jts-topo-suite-user

I don't do anything special for 4 points aligned on a circle. I have tested this tho - the algorithm seems to be robust, but the results are non-determinate.

I did some some performance testing of the DT using DD. It was only about 20% slower, I think - so well worth the cost.

Actaully, make that 2x slower for using inCircle in DD versus double-precision.

Still preferable to failing, but also a significant time penalty. The way to reduce this would be to introduce a fast filter to decide whether DD was necessary or not. I'm not sure what this would look like, though. If anyone has any ideas I'd be glad to hear them.

hi yeah, is it possible to use delaunay triangulation maps on palm-print image for recogniion? can you please give solution for this question because i am doing my project on this .

thankyou,

prashanth

@lellela: Sorry, no idea about this.

Post a Comment